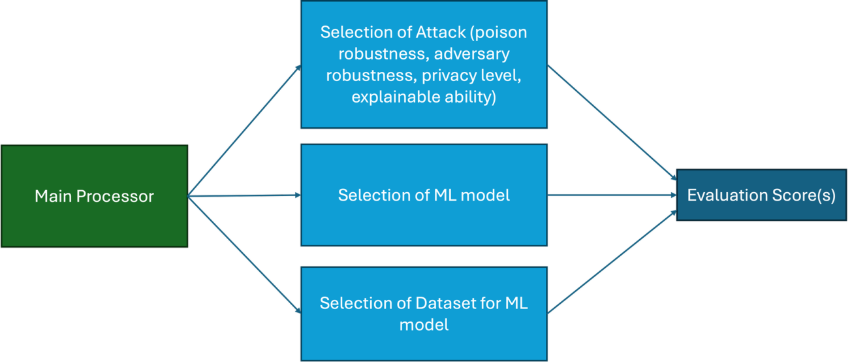

Development of the AI-SEC tool [1] is making steady progress. The tool is designed to systematically evaluate security and trustworthiness properties of AI models in accordance with the AIC4 requirements [2]. The evaluation covers key aspects such as privacy protection, data poisoning resistance, adversarial robustness, and explainability. To ensure both comprehensive coverage and technical depth, we surveyed state-of-the-art (SOTA) research methods and integrated several of them into a scalable, modular testing framework.

The tool's core architecture and its standardized metric definitions are now complete. Each property category (e.g., adversarial robustness or explainability) includes carefully selected test cases, benchmark datasets, and result aggregation modules that produce reproducible, comparable results. The AI-SEC tool emphasizes transparency and auditability to ensure compatibility with AIC4 certification.
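
To make the modular structure more concrete, the following is a minimal sketch of how property categories, test cases, and result aggregation could fit together. It is not taken from the AI-SEC code base: the names `PropertyCategory`, `aggregate`, and `run_all`, the convention that each test returns a score in [0, 1], and the use of a plain mean for aggregation are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Callable, Dict, List

# Hypothetical types for illustration only; not the AI-SEC API.
Model = Callable[[float], float]     # a model is just a callable here
TestCase = Callable[[Model], float]  # assumed to return a score in [0, 1]


@dataclass
class PropertyCategory:
    """One evaluated property, e.g. adversarial robustness."""
    name: str
    test_cases: Dict[str, TestCase] = field(default_factory=dict)

    def register(self, test_name: str, test: TestCase) -> None:
        """Add a named test case to this category."""
        self.test_cases[test_name] = test

    def evaluate(self, model: Model) -> Dict[str, float]:
        """Run every test in a fixed (sorted) order for reproducibility."""
        return {n: self.test_cases[n](model) for n in sorted(self.test_cases)}


def aggregate(per_test: Dict[str, Dict[str, float]]) -> Dict[str, float]:
    """Assumed aggregation rule: mean score per category, skipping empty ones."""
    return {
        category: mean(list(scores.values()))
        for category, scores in per_test.items()
        if scores
    }


def run_all(categories: List[PropertyCategory], model: Model) -> Dict[str, dict]:
    """Evaluate all categories and keep per-test scores for auditability."""
    per_test = {c.name: c.evaluate(model) for c in categories}
    return {"per_test": per_test, "per_category": aggregate(per_test)}
```

Keeping the per-test scores next to the aggregated ones is one simple way to support auditing: any category-level number can be traced back to the individual tests that produced it.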

The next phase will focus on broadening the tool’s applicability to a wider range of machine learning model types, including but not limited to models trained on text (NLP), image, and other data modalities. This expansion aims to make the AI-SEC tool more versatile and adaptable across AI application domains.

[1] https://git.code.tecnalia.dev/emerald/public/components/ai-sec